Left: Val d'Astino from Via San Sebastiano, Bergamo. Right: Via Agliardi in Paladina, Bergamo.

Length: 14 km (8.7 miles)

Duration: 3 hours (excluding lunch)

Elevation: 500 m (1,640 ft)

Location: Italy, Lombardy, Bergamo - Paladina

As we have said many times in this blog, we walk to clear our minds. And we walk to eat. And on this beautiful day, both objectives were satisfied.

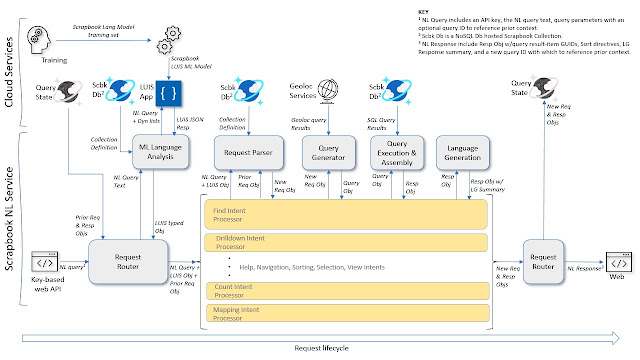

What we call the "backside of Bergamo" is the line of hills that extends northwest from the upper city. It's a ridge that's mostly wooded and crisscrossed with walking trails. Most people enter Bergamo from the highway, train station, or airport, so they see Bergamo and its upper city from the south. In that sense, the backside, as we call it, is quieter and less frequented by tourists. Of course, someone's backside could be someone else's frontside. It's all a matter of perspective.

Today's eating objective is the elegant Osteria Scotti, located in Paladina, northwest of Bergamo. Walking, it took us about 1.5 hours to reach it. There are many ways to get there. From the tip top of Bergamo at San Vigilio, we took Via San Sebastiano to Via Fontana to Via Madonna della Castagna. From the Santuario della Beata Vergine della Castagna, you can walk on a trail or go straight to the osteria on Via Sombreno.

Roundtrip from our location in città bassa Bergamo, the walk turned out to be about 14 km with an elevation gain of about 500 m. (You can reduce the elevation gain by choosing routes that stay low.)

The way back took us about 3 hours. What happened? We ran into friends and stopped to chat, and then made a quick pit stop at Forno Fassi Bakery for provisions. That's the beauty of walking: the unexpected encounters and small delights along the way.

Speaking of walking, we recently came across the Out of Eden Walk project sponsored by National Geographic. The project is a decade-long experiment in slow journalism following the journalist Paul Salopek's 24,000-mile odyssey walking the pathways of the first humans who migrated out of Africa all the way to South America.

The project resonated with us: while we never would or could walk that far, we love walking and experiencing the world at walking speed, about 3 miles an hour. We love traveling and experiencing the world on foot, even more so than by train, car, or even bike. We see and experience the world more intimately walking. We always feel better after a good walk. And, in this case, we had a great meal.

Left: A grand entrance on Via Madonna della Castagna.

Center: Via Colle dei Roccoli, Bergamo.

Right: Via Fontana, Bergamo.

Left: A roadside altar on Madonna della Castagna.

Center: Façade of the Parrocchia di Sombreno.

Right: House pattern on Via San Sebastiano, Bergamo.

Left: Via San Sebastiano, Bergamo.

Center: Espaliered beech trees - Osteria Scotti entrance.

Right: The sculpted hills of Bergamo along Via San Sebastiano with Monte Rosa in the distance.

Left: Trail extending from Via Agliardi in Paladina.

Center: A donkey on Via Sotto Mura di Sant'Alessandro.

Right: Dusk from Viale delle Mura, Bergamo. The end of our day and today's walk.

Left: Osteria Scotti - Amuse-bouche with chestnut cream.

Center: Osteria Scotti - Soffice di cavolfiore, uovo trasparente, salsa alle alici e crostini.

Right: Osteria Scotti - Zuppa di cipolle in crosta.

Left: Osteria Scotti - Sella di maialino cotta a bassa temperatura, riduzione al mirto e purè di finocchio.

Right: Osteria Scotti - Tataki di ricciola, insalata di salicornia, maionese alla nocciola. Ricciola is Greater Amberjack.

Left: Osteria Scotti - morbido alla farina di cocco e cioccolato fondente.

Right: Osteria Scotti - zabajone e gelato alla cannella.