Overview

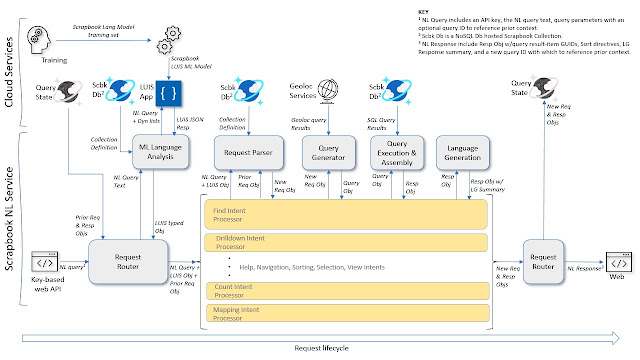

This diagram shows our NL QueryEngine service. It illustrates how we process natural language queries (questions posed in plain English) that are passed into the QueryEngine API from our Scrapbook web application or from bot service applications (MS Teams, Alexa). The QueryEngine service generates the appropriate actions and SQL queries to return the results requested from the user’s Scrapbook collection.

The diagram reads from left to right. At left, our API accepts an NL query object, which includes the user’s request as natural language text (NL Query), an application key, and other parameters. The NL query text is routed to the LUIS cognitive service app, where it is evaluated against our ML-trained language model. The model extracts the ‘intents’ and ‘entities’ that we’ve prepared it to recognize, which we then use to identify an action and to construct an appropriate SQL database query.
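To make the input concrete, here is a minimal sketch of what such an NL query object might look like. Only the query text and the application key come from the description above; the field names and the `channel` and `collection_id` parameters are assumptions for illustration, not the actual API contract.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class NLQuery:
    """Hypothetical shape of the object posted to the QueryEngine API."""
    text: str                # the user's request in plain language
    app_key: str             # identifies the calling application
    channel: str = "web"     # assumed parameter: web, teams, alexa, ...
    collection_id: str = ""  # assumed parameter: which collection to search


def to_json(query: NLQuery) -> str:
    """Serialize the query object for use as an HTTP request body."""
    return json.dumps(asdict(query), sort_keys=True)


example = NLQuery(
    text="Show me books I read in 2015 with science in the title",
    app_key="demo-key",
    channel="teams",
)
payload = to_json(example)
```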

Depending on the inferred ‘intent’, NL query processing is routed via a specific processing pipeline (labeled above as Intent Processors). Each NL query follows a similar process flow: it starts at the Request Router, then passes through ML language analysis, routing, request parsing, query generation, query execution, and language generation (summarizing the query as interpreted and the results found), and finally returns to the Request Router, which assembles and returns a response. Query State allows for contextual or ‘follow-on’ queries.

The processing pipeline uses various Azure cloud services, including LUIS and Cosmos DB (shown in the upper part of the diagram).

What are we writing about and why are we writing about it?

This post is about our work on a Natural Language (NL) parser for an Information Management Platform we developed called Scrapbook. We've covered Scrapbook in several previous posts (2017 introduction, 2019 our memex, 2021 user scenarios) and we continue in this post with a focus on how we deal with NL queries. By “NL query”, we mean a question or request posed in ordinary plain language as you would to another person. Users interact with the Scrapbook platform through any of various bot channels including MS Teams and Alexa, or via our Scrapbook web application. For example: “Tell me about walks we did in Greece last summer with Mary.”

How did we end up using NL in Scrapbook?

There were two major impetuses that drove our implementation of NL, the first of which emerged organically in our implementation of a bot service as a means to access the Scrapbook platform via chat experiences such as Alexa, MS Teams, Skype, and Messenger. These experiences all intrinsically imply some level of NL interaction, the most typical implementation of which is an interrogative or a “waterfall” style dialog. An example:

"What can I help you with?" => "I'm looking for books."

"In what date range?" => "In 2015."

"Title word?" => "science"

We felt this would be cumbersome as the Scrapbook data model allows for many dimensions, and the data itself spans a broad range of possible information domains. Forcing the user through a chain of questions to probe these dimensions is unwieldy and at best, tedious for the user. We sought a more conversational and fluid ‘natural language’ user experience: "Show me books I read in 2015 with science in the title".

In order to achieve this, we architected a query processing ‘engine’ that allows us to handle free-form user queries against the depth and breadth of Scrapbook’s collections and data models. We’ll explain later how this works.

The second major phase of NL query processing development was driven by our realization that the work we were doing with bots could be more broadly applied to Scrapbook searching in general, regardless of the app, and in particular, from our web application. This created an opportunity to completely separate the NL processing logic from the bot code within which it was originally developed, and to generalize it as a web service that can be called from any Scrapbook user experience whether via a bot channel, web app, mobile, or other.

We understood that as our data model and data itself became richer and more complex, it would be increasingly challenging and expensive to build and maintain forms-based query interfaces within the application. A further challenge was ensuring that the search experience remained intuitive and efficient to use. Forms-based query interfaces may be implemented as single or multiple search boxes with dropdowns and other standard controls to refine the search. These controls remain available in our Scrapbook web application. However, we found that the NL processing and query generation capability that we were implementing in the bot service had already begun to exceed that of our forms-based queries in the app, and was at once more powerful, faster and easier to use.

So, we added an NL query option in the Scrapbook web application that called our newly generalized NL Query Engine, now exposed as a web service. Natural Language querying became almost immediately the go-to search experience in Scrapbook and has continued to evolve in both capability and robustness.

The NL Query Engine, how does it work?

Referring back to the diagram above, the first step in the NL Query Engine processing chain is ML (Machine Learning) Language Analysis, which is called from the Request Router and leverages Microsoft’s LUIS (Language Understanding Intelligent Service) in Azure.

The LUIS service accepts a training model, which is essentially a structured document containing a large number of labeled utterances (sample NL phrases) that we anticipate receiving and that we want our application to recognize and handle intelligently. To enhance ML training and to boost recognition performance, we add a combination of machine-learned, built-in, static and dynamic features, as well as sentence patterns. Once the ML training has been completed and verified, a Scrapbook ML ‘app’ is published on LUIS as a service that we call from our NL Query Engine to analyze Scrapbook user input. We perform this training and publishing process iteratively, both to introduce new functionality and to improve recognition performance. The Scrapbook LUIS ML app returns a JSON object which contains the intent and a set of entity features inferred from the input phrase. Depending on the returned LUIS intent and query context, the Request Router directs program flow to the appropriate ‘Intent Processor’.
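The routing step can be pictured with a short sketch. The response shape below is modeled on the LUIS V3 prediction JSON (a top intent plus a dictionary of entities), with made-up sample values; the processor functions and the `INTENT_PROCESSORS` registry are hypothetical names, not our actual code.

```python
from typing import Callable, Dict

# Sample result, shaped like a LUIS V3 prediction response (values invented).
luis_result = {
    "query": "Show me wines from France in 2018",
    "prediction": {
        "topIntent": "find",
        "intents": {"find": {"score": 0.97}},
        "entities": {"subcategory": ["wines"], "geography": ["France"]},
    },
}


def handle_find(entities: dict) -> str:
    # Placeholder for the 'find' Intent Processor.
    return f"find:{sorted(entities)}"


def handle_help(entities: dict) -> str:
    # Placeholder for the 'help' Intent Processor.
    return "help"


# Hypothetical registry of Intent Processors keyed by intent name.
INTENT_PROCESSORS: Dict[str, Callable[[dict], str]] = {
    "find": handle_find,
    "help": handle_help,
}


def route(result: dict) -> str:
    """Dispatch to the processor for the top intent; fall back to help."""
    prediction = result["prediction"]
    processor = INTENT_PROCESSORS.get(prediction["topIntent"], handle_help)
    return processor(prediction["entities"])


routed = route(luis_result)
```

Falling back to a help processor for an unrecognized intent is a design choice made for this sketch only.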

The business logic within each Intent Processor may differ, but the query processing flow is always the same, passing next to the Request Parser, then to the Query Generator, Query Execution, and finally back to the Request Router.

The Request Parser accepts a LUIS object containing the intent and entities identified in the user’s NL query, a Collection Definition object enumerating the specific categories, subcategories and synonyms associated with the Scrapbook collection being queried, and optionally, a prior Request Object that we use to facilitate contextual parsing. The Request Parser extracts and builds what we call a Request Object. The Request Object holds all of the query terms and parameters that are needed to generate the actual database query. These include category, subcategory, date-time, location, field, text string, negation, sorting, and various selection parameters.
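As an illustration of contextual parsing, the sketch below builds a Request Object from recognized entities and inherits any missing terms from a prior request. The class, its field names, and the flat entity dictionary are assumptions made for this example; the real Request Object carries more parameters than shown.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RequestObject:
    """Hypothetical container for the parsed query terms."""
    category: Optional[str] = None
    subcategory: Optional[str] = None
    location: Optional[str] = None
    field_name: Optional[str] = None
    text: Optional[str] = None


def parse_request(entities: dict,
                  prior: Optional[RequestObject] = None) -> RequestObject:
    """Copy recognized entities into a Request Object.

    Terms absent from the new query are inherited from the prior request,
    which is one simple way to support follow-on queries.
    """
    base = prior or RequestObject()
    return RequestObject(
        category=entities.get("category", base.category),
        subcategory=entities.get("subcategory", base.subcategory),
        location=entities.get("location", base.location),
        field_name=entities.get("field", base.field_name),
        text=entities.get("text", base.text),
    )


first = parse_request({"category": "drink", "subcategory": "wine",
                       "location": "France"})
# A follow-on such as "What about red wines?" only changes the subcategory.
follow_on = parse_request({"subcategory": "red"}, prior=first)
```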

The query “Show me wines from France in 2018 with a rating of at least 3”, for example, is routed after ML Language Analysis to the ‘Find’ Intent Processor, from which the Request Parser extracts the following elements and parameters:

- Category: ‘drink’

- Subcategory: ‘wine’

- Geolocation: ‘({location: france}, {type: countryRegion})’

- DateRange: ‘({1/1/2018 12:00:00 AM}, {1/1/2019 12:00:00 AM})’

- QueryTerm: ‘with’

- Field: ‘rating’

- Text: ‘at least 3’

The Query Generator then turns these elements into SQL clauses:

- Category: AND ( STRINGEQUALS(c.category, "Drink", true) )

- Subcategory: AND ( (RegexMatch(c.bodyObj["type"], "^(|.*,)(|\\s)wine(,.*|)$", "i")) )

- GeoLocation: AND ST_WITHIN(c.geoLocation, {"type":"MultiPolygon","coordinates":[[[[…]]]] } )

- DateRange: AND ( c.itemDate >= "2018-01-01T00:00:00" AND c.itemDate < "2019-01-01T00:00:00" )

- SearchString: AND StringToNumber(c.bodyObj["rating"]) >= 3
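The SQL clauses listed above could be assembled along the following lines. This is a simplified sketch, not our Query Generator: the function names are hypothetical, the geolocation clause is omitted, and values are interpolated directly into the string only to keep the example short.

```python
from typing import List, Optional, Tuple


def subcategory_clause(value: str) -> str:
    """Match the value as one element of the comma-separated "type" field."""
    pattern = f"^(|.*,)(|\\\\s){value}(,.*|)$"
    return f'( (RegexMatch(c.bodyObj["type"], "{pattern}", "i")) )'


def build_query(category: str,
                subcategory: Optional[str] = None,
                date_range: Optional[Tuple[str, str]] = None,
                min_rating: Optional[int] = None) -> str:
    """Join the clauses that apply into a single Cosmos DB SQL query."""
    clauses: List[str] = ['c.type = "scrapbookItem"']
    clauses.append(f'( STRINGEQUALS(c.category, "{category}", true) )')
    if subcategory:
        clauses.append(subcategory_clause(subcategory))
    if date_range:
        start, end = date_range  # half-open range: start <= date < end
        clauses.append(
            f'( c.itemDate >= "{start}" AND c.itemDate < "{end}" )')
    if min_rating is not None:
        clauses.append(
            f'StringToNumber(c.bodyObj["rating"]) >= {min_rating}')
    where = "\n  AND ".join(clauses)
    return (f"SELECT c.id, c.itemDate FROM c\nWHERE {where}\n"
            "ORDER BY c.itemDate DESC")


sql = build_query("Drink", "wine",
                  ("2018-01-01T00:00:00", "2019-01-01T00:00:00"), 3)
```

Cosmos DB also supports parameterized queries, which would be the safer choice for user-supplied values than the string interpolation used here.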

We generate natural language fragments along the way that ‘restate’ the query as it was interpreted by our processing, and once the query has been executed, we summarize the results returned from the database whether successful or not. “Here are the 6 items found in category Drink of type Wine within France between 2018 and 2019 with a rating >= 3.”
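A restatement like the one quoted above can be composed from fragments, one per query term. The sketch below reproduces that sentence; the function and its parameters are hypothetical, and the wording for empty and single-item results is our own guess at reasonable phrasing.

```python
from typing import Optional, Tuple


def summarize(count: int, category: str,
              subcategory: Optional[str] = None,
              place: Optional[str] = None,
              years: Optional[Tuple[int, int]] = None,
              condition: Optional[str] = None) -> str:
    """Restate the interpreted query and report how many items matched."""
    if count == 0:
        lead = "No items were found"
    elif count == 1:
        lead = "Here is the 1 item found"
    else:
        lead = f"Here are the {count} items found"
    parts = [f"{lead} in category {category}"]
    if subcategory:
        parts.append(f"of type {subcategory}")
    if place:
        parts.append(f"within {place}")
    if years:
        parts.append(f"between {years[0]} and {years[1]}")
    if condition:
        parts.append(f"with {condition}")
    return " ".join(parts) + "."


summary = summarize(6, "Drink", "Wine", "France", (2018, 2019),
                    "a rating >= 3")
```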

The Intent Processor returns control to the Request Router along with the new Request and Response objects.

Finally, the Request Router saves the Request and Response objects to state memory as context for possible follow-on queries, and returns the results to the calling application as an NL Response object.
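For illustration only, query state can be thought of as a small store keyed by conversation. The in-memory class below is a hypothetical stand-in; it says nothing about where or how our service actually persists state.

```python
from typing import Dict, Optional, Tuple


class QueryState:
    """Hypothetical store of the last request and response per conversation."""

    def __init__(self) -> None:
        self._last: Dict[str, Tuple[dict, dict]] = {}

    def save(self, conversation_id: str, request: dict,
             response: dict) -> None:
        # Overwrite any earlier entry: only the latest exchange is kept.
        self._last[conversation_id] = (request, response)

    def prior_request(self, conversation_id: str) -> Optional[dict]:
        # Return the stored request, or None for a new conversation.
        entry = self._last.get(conversation_id)
        return entry[0] if entry else None


state = QueryState()
state.save("conv-1", {"category": "drink"}, {"count": 6})
```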

So to recap, how do we search for information in Scrapbook?

Users have two options:

- Natural Language requests (default) – submitted from our Scrapbook web application, a web bot, or other channels including Alexa and MS Teams – are translated as described above by our NL Query Engine into SQL queries, which are run against the active Scrapbook collection stored in Cosmos DB. Search results are presented according to the application or channel. On an Alexa Spot device, for example, the results are spoken. Requests to switch collections, change views, select a specific result, explore relationships, or ask for help are also supported via natural language.

- Form-based searches – as is common in many web or console applications – are translated into LINQ queries, which are run against the active Scrapbook collection stored in Cosmos DB. (LINQ is a programming model abstraction for querying data. The LINQ syntax is translated behind the scenes into SQL by the Cosmos DB API.)

Background

What is natural language processing (NLP)?

- NLP is what computers do to interpret human language: processing natural language into a meaningful action or result.

- For example, in Scrapbook we can ask "Show me hikes we did in 2016 near Cortina d'Ampezzo". NLP is the interpretation and processing of this sentence to return a set of results that satisfy the user’s question.

- Equally important, Scrapbook generates a natural language response describing what it did and what it found, in language easily understood by the user whether displayed or spoken.

What is Language Understanding (LUIS)?

- Azure LUIS is a cloud-based conversational AI service that applies custom machine-learning intelligence to a user's conversational, natural language text (called an ‘utterance’) to predict and score an overall intent, and to extract and label relevant, detailed information within the text.

- An utterance is textual or spoken input from the user, the user’s question or query.

- In Scrapbook for example, an utterance might be "Show me album covers from the 1980s with the keyword ‘hair’."

- We create an ‘application’ in LUIS by defining a model. Within the model we identify the features we want to recognize. There are two principal feature categories: intent and entity.

- An intent may be a task or action that the user wants to perform.

- For example, in Scrapbook, our intents are "find", "drilldown", "map", "count", “related”, “collections”, “sort”, "help", "debug", "select", among others.

- In the query "What are lunches we’ve had nearby that we’ve rated at least 4?", the intent is "find".

- Our Scrapbook ML model currently distinguishes 18 intents.

- Entities are specific features that we train our LUIS application to recognize within the utterance, akin to the parts of a sentence.

- In the query "Show me wines we had from Italy last year with the variety primitivo", the entities we recognize are "datetime (last year)", "geography (Italy)", "category (drink)", "subcategory (wine)", "field (variety)", and "text (primitivo)".

- Our ML model entities include category, subcategory, text, number, dimension, ordinal, parameter, query type (with, by, …), nearby, location, geolocation, datetime, and query object (when not a category).

- We currently recognize 19 entities and roles.

- Our Scrapbook ML model in LUIS uses a combination of built-in entities such as DateTime, machine-learned entities such as location or text, fixed lists, and dynamic lists. Category and SubCategory are examples of dynamic-list entities that we pass into the model via the API with the utterance. We do this because each Scrapbook collection has its own category definitions. This strategy allows us to achieve excellent category entity recognition across multiple collections. For examples of different collections, see the post 2021 user scenarios.
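To show what passing a dynamic list with the utterance can look like, here is a sketch that builds a prediction request body. The `dynamicLists` layout (`listEntityName`, `requestLists`, `canonicalForm`, `synonyms`) follows our reading of the LUIS V3 prediction API and should be verified against the official documentation; the function name and sample categories are illustrative.

```python
from typing import Dict, List


def build_prediction_body(utterance: str,
                          categories: Dict[str, List[str]]) -> dict:
    """Build a request body carrying collection-specific categories.

    `categories` maps each category name to its list of synonyms.
    """
    request_lists = [
        {"name": name, "canonicalForm": name, "synonyms": synonyms}
        for name, synonyms in categories.items()
    ]
    return {
        "query": utterance,
        "dynamicLists": [
            {"listEntityName": "category", "requestLists": request_lists}
        ],
    }


body = build_prediction_body(
    "Show me hikes we did in 2016",
    {"activity": ["hike", "run"], "book": []},
)
```

Because the lists travel with each request, the same trained model can serve collections whose category definitions differ.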

- We refine our LUIS ML model by adding or modifying training utterances, patterns and lists in a language understanding (.lu) format file. Once uploaded to our LUIS Conversation App in Azure, the file is used to train a new instance of our model. Once the updated model passes acceptance testing, we publish it into production.

What is Cosmos DB?

- Cosmos DB is a managed NoSQL database service that stores documents in collections that can be queried using familiar SQL query syntax. Each "item" in a Scrapbook collection is stored as a Cosmos DB document.

- Cosmos DB is a schema-free database, which means we structure or model the data as we need for the domain of the collection it represents (see the data model).

- Structured Query Language (SQL) queries are how we query our collections in Cosmos DB.

- Platform / Collection / Item

- Scrapbook collections are maintained in distinct Cosmos DB database collections.

- A Scrapbook item is stored as a Cosmos DB document within a collection.

- The Scrapbook platform supports one or more collections.

- A Scrapbook collection has one or more items. For reference, our collections have thousands of items.

- Item / Category / Subcategory (or type) & Fields

- Scrapbook items have mandatory id, datetime, and category fields. All other fields are optional depending on the category definition. Items are organized principally by category.

- Each category may have one or more subcategories and synonyms to enhance collection organization, flexibility and queryability. For example, the category ‘activity’ might have ‘run’ as a subcategory, and ‘jogging’ as a synonym for ‘run’.

- Each category has an associated set of fields that further define it. The category ‘book’ for example, would have an author field, which might not be used in other categories.
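The category, subcategory, and synonym relationship can be sketched as a simple lookup. The nested dictionary below is a hypothetical stand-in for a Collection Definition, populated with the examples from this section.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical Collection Definition: category -> subcategory -> synonyms.
COLLECTION_DEFINITION: Dict[str, Dict[str, List[str]]] = {
    "activity": {"run": ["jogging"], "hike": []},
    "book": {"reference": []},
}


def resolve(term: str) -> Optional[Tuple[str, str]]:
    """Map a user's word to (category, subcategory), following synonyms."""
    wanted = term.lower()
    for category, subcategories in COLLECTION_DEFINITION.items():
        for subcategory, synonyms in subcategories.items():
            if wanted == subcategory or wanted in synonyms:
                return category, subcategory
    return None


resolved = resolve("Jogging")
```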

User Query Examples

"Show me hikes"

SELECT c.id, c.itemDate FROM c

WHERE c.type = "scrapbookItem"

AND ( STRINGEQUALS(c.category, "Activity", true) )

AND ( ( RegexMatch(c.bodyObj["type"], "^(|.*,)(|\\s)hike(,.*|)$", "i") ) )

ORDER BY c.itemDate DESC

SELECT c.id, c.itemDate FROM c

WHERE c.type = "scrapbookItem"

AND ( STRINGEQUALS(c.category, "Travel", true) )

AND (c.itemDate >= "2020-01-01T00:00:00" AND c.itemDate < "2021-01-01T00:00:00")

AND ST_WITHIN(c.geoLocation, {"type":"Polygon","coordinates":[[[9.2592,44.6784], …, [9.2592,44.6784]]]})

ORDER BY c.itemDate DESC

"Show me wines rated greater than 3 from France"

- followed by “What about red wines?”

- followed by “What about from Napa Valley?”

SELECT c.id, c.itemDate FROM c

WHERE c.type = "scrapbookItem"

AND ( STRINGEQUALS(c.category, "Drink", true) )

AND ( ( RegexMatch(c.bodyObj["type"], "^(|.*,)(|\\s)wine(,.*|)$", "i") ) )

AND StringToNumber(c.bodyObj["rating"]) > 3

AND ST_WITHIN(c.geoLocation, {"type":"Polygon","coordinates":[[[2.65916,42.34262] …, [2.65916,42.34262]]]})

ORDER BY c.itemDate DESC

SELECT c.id, c.itemDate FROM c

WHERE c.type = "scrapbookItem"

AND ( STRINGEQUALS(c.category, "Drink", true) )

AND ( ( RegexMatch(c.bodyObj["type"], "^(|.*,)(|\\s)red(,.*|)$", "i") ) )

AND StringToNumber(c.bodyObj["rating"]) > 3

AND ST_WITHIN(c.geoLocation, {"type":"Polygon","coordinates":[[[2.65916,42.34262] …, [2.65916,42.34262]]]})

ORDER BY c.itemDate DESC

SELECT c.id, c.itemDate FROM c

WHERE c.type = "scrapbookItem"

AND ( STRINGEQUALS(c.category, "Drink", true) )

AND ( ( RegexMatch(c.bodyObj["type"], "^(|.*,)(|\\s)red(,.*|)$", "i") ) )

AND StringToNumber(c.bodyObj["rating"]) > 3

AND ST_WITHIN(c.geoLocation, {"type":"Polygon","coordinates":[[[-122.29542286330422,38.26322398466052],[-122.29542286330422,38.25549854951917],[-122.28230540818015,38.25549854951917],[-122.28230540818015,38.26322398466052],[-122.29542286330422,38.26322398466052]]]})

ORDER BY c.itemDate DESC

SELECT c.id, c.itemDate FROM c

WHERE c.type = "scrapbookItem"

AND NOT ( ( RegexMatch(c.bodyObj["type"], "^(|.*,)(|\\s)museum(,.*|)$", "i") AND IS_DEFINED(c.bodyObj["type"])) )

AND ( ST_DISTANCE(c.geoLocation, {'type': 'Point', 'coordinates':[current lon, lat]}) < 100 )

ORDER BY c.itemDate DESC

- followed by "Show me a list"

SELECT VALUE COUNT(1) FROM c

WHERE c.type = "scrapbookItem"

AND ( STRINGEQUALS(c.category, "Book", true) )

AND (c.itemDate >= "2022-01-01T00:00:00" AND c.itemDate < "2023-01-01T00:00:00")

SELECT c.id, c.itemDate FROM c

WHERE c.type = "scrapbookItem"

AND ( STRINGEQUALS(c.category, "Book", true) )

AND (c.itemDate >= "2022-01-01T00:00:00" AND c.itemDate < "2023-01-01T00:00:00")

ORDER BY c.itemDate ASC

"Show me hikes last year except with Roberto"

- followed by "Show me a map"

SELECT c.id, c.itemDate FROM c

WHERE c.type = "scrapbookItem"

AND ( STRINGEQUALS(c.category, "Activity", true) )

AND ( ( RegexMatch(c.bodyObj["type"], "^(|.*,)(|\\s)hike(,.*|)$", "i") ) )

AND (c.itemDate >= "2021-01-01T00:00:00" AND c.itemDate < "2022-01-01T00:00:00")

AND NOT ( (CONTAINS(c.bodyObj["who"], "roberto", true) AND IS_DEFINED(c.bodyObj["who"])) )

ORDER BY c.itemDate DESC

“Show me a map” results in a ‘map’ intent which we interpret as a command to display a map of results.
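The date clause in the "last year" query above uses a half-open range: on or after January 1 of one year and strictly before January 1 of the next. A minimal sketch of computing such a range follows; the function names are hypothetical, and the reference date is passed in explicitly so the example does not depend on the current date.

```python
from datetime import date, datetime
from typing import Tuple


def year_range(year: int) -> Tuple[str, str]:
    """Return ISO timestamps for [Jan 1 of year, Jan 1 of year + 1)."""
    start = datetime(year, 1, 1)
    end = datetime(year + 1, 1, 1)
    return start.isoformat(), end.isoformat()


def last_year_range(today: date) -> Tuple[str, str]:
    """Resolve the phrase 'last year' relative to the given date."""
    return year_range(today.year - 1)


start, end = last_year_range(date(2022, 6, 15))
```

An exclusive upper bound avoids having to spell out the last instant of December 31.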

"Find me books of type reference"

Here's the SQL from the NL query:

SELECT c.id, c.itemDate FROM c

WHERE c.type = "scrapbookItem"

AND ( STRINGEQUALS(c.category, "Book", true) )

AND ( ( RegexMatch(c.bodyObj["type"], "^(|.*,)(|\\s)reference(,.*|)$", "i") ) )

ORDER BY c.itemDate DESC

In the Scrapbook web application, we can also search via form-based web controls which generate a LINQ query that in turn translates into the following similar SQL:

SELECT VALUE root FROM root

WHERE (((true AND CONTAINS(LOWER(root["bodyObj"]["type"]), "reference"))

AND (LOWER(root["category"]) = "book"))

AND (root["type"] = "scrapbookItem"))

ORDER BY root["itemDate"] DESC
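Judging only from the two SQL statements shown, the NL query and the form-based query match the type field differently: the regular expression requires the value to be a whole element of a comma-separated list, while CONTAINS accepts any substring. The Python sketch below mimics both checks to show where they diverge; the helper names and sample values are ours.

```python
import re


def regex_match(type_field: str, value: str) -> bool:
    """Mimic the NL query's RegexMatch: value must be a whole list element."""
    pattern = rf"^(|.*,)(|\s){re.escape(value)}(,.*|)$"
    return re.search(pattern, type_field, re.IGNORECASE) is not None


def contains_match(type_field: str, value: str) -> bool:
    """Mimic the form query's CONTAINS(LOWER(...)): any substring matches."""
    return value.lower() in type_field.lower()


# Both approaches accept an exact element in a comma-separated list.
both_match = (regex_match("fiction, Reference", "reference")
              and contains_match("fiction, Reference", "reference"))

# Only the substring check accepts a longer word containing the value.
regex_only_rejects = (not regex_match("cross-reference", "reference")
                      and contains_match("cross-reference", "reference"))
```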
