. To understand what that means, let's talk about some of the common information management tools we use each day. There are file storage services such as OneDrive, iCloud, and Dropbox, which we use to help manage photos and files, and email services like Gmail or Outlook, which we use to organize email, contacts, and calendar events. Facebook, LinkedIn, Instagram, and TikTok are social network services that facilitate communication and interaction between friends and contacts via content and personal timelines. Twitter and blogging are further examples of information sharing platforms through which we relate what we're doing and what we've been thinking about. And let's not forget the numerous offerings for personal wikis and journaling software.

All of these platforms manage your personal information in one form or another. Each has strengths and weaknesses and attempts to address a part of the personal information management problem. Scrapbook doesn't aim to replace any of these tools. In fact, Scrapbook can interface with these tools. That's nice, you say, but how is Scrapbook different? We created Scrapbook to deal with four concerns that we felt are not yet adequately addressed:

Archival data is information that we don't need immediate access to, but may wish to review in the future. It includes, but is not limited to, year-end financial statements, old health records, newspaper clippings, postcards, brochures, tickets, invitations, and letters. Archival data is all of that physical paperwork that ends up in over-stuffed folders in drawers or file cabinets. Think of the times you needed to find something, searching page by page through one bulging folder after another to finally locate (or not) what you were looking for. If your archival data happens to be digitized already, it's likely stored somewhere on a drive or in the cloud, but finding it can be tricky. The idea of not being able to locate important information at some point in the future bothered us, and we set out to address it with Scrapbook.

For years, we saved things like playbills of theater shows, mementos of places we visited, articles cut out of the paper, labels from products we liked, and other tidbits of information. We pasted the scraps of paper into blank notebooks, which eventually filled up, and our "scrapbooks" grew in number over the years. We noticed that we rarely referred back to these physical scrapbooks, in large part because it was hard to find anything except by browsing in chronological order. You can think of Scrapbook, in part, as a digital version of the physical scrapbooks in which we preserved these scraps of information, but far more accessible and fun.

Left: Physical Scrapbook Page from 2002. Right: Physical Scrapbook Page from 2000.

2. How to capture context about data

Metadata provides context about an item (event, place, person, or object) that we capture because it is important to understanding why the item is interesting in the first place. Contextual data gives meaning to and unlocks understanding of the event, place, person, or object. But how to capture this metadata and where to persist it isn't obvious. For example, we keep personal notes on friends that include reminders about what they like to eat or are allergic to, the names of their kids, and important events in their lives. You might be thinking, why not store that in Outlook or Gmail contacts, and you'd be correct. But what about personal notes on books read? Or thoughts about a special dinner, an epic hike, or a great concert? Where can we store all of these notes (this context) consistently and in one place, whether it's about an event, place, person, or object? Consistent management of contextual information (metadata) is one of the scenarios we set out to address in Scrapbook.

In the photos of pages from our physical scrapbooks, written notes can be seen around the pasted objects. These are examples of 'metadata' that provide necessary context.

3. How to find relevant information quickly

It's ironic that using Bing or Google we can call up all the details of a celebrity within seconds. But what about someone we actually know or care about? We know what you're thinking: just go to Facebook and look them up. Yes, that sort of works, if the person in question even uses Facebook. However, here we are talking about information that matters to us, which is information about someone that probably wouldn't appear in a social media profile at all. It's information that's gleaned by spending time with someone, in person, in the context of a direct relationship. How to access this kind of context quickly and on our terms intrigued us and became a guiding principle for Scrapbook.

We use people data here as an example, but our argument applies to all types of information, be it events, places, or objects. In a way, Scrapbook is a small private search engine customized for our data. How quickly can we search it? Less than 10 seconds in most cases. That might not sound that great at first, but think about that filing cabinet of over-stuffed folders. How long would it take to find something in there? Or how long would it take to navigate a half dozen different apps or websites to find what you are looking for? With that in mind, 10 seconds isn't bad at all.

4. How to own our information

Fundamentally, we don't have much trust in many of the platforms we've mentioned above, especially the current flavors of social network services. We are not keen on having our personal information exploited by algorithms to sell us products or filter our news. Yes, we tolerate this to a degree, but absolutely not for the broader categories of information we have in mind here. And while we acknowledge that these services are fun and constitute an important social component for many, it seems crazy to us that people spend time creating detailed personal timelines that generate ad sales for someone else.

We’re also not confident in the longevity of these services and platforms. Five years is a long time and ten years an eternity in the technology sector. MySpace, for example, is a distant memory. Facebook is already regarded as passé, with internet newbies now flocking to Snapchat. Smaller platforms come and go in a relative twinkling. Returning for a moment to the concept of an archive, we’re thinking long term.

From the start, Scrapbook was envisioned as a way we could maintain as much control as possible of our own data, and really, the story of our lives, while making it accessible in a secure, sustainable manner. That desire led us down the avenue of developing our own solutions. It's not an easy road, but it's been rewarding. We maintain that taking ownership of our data has given us a greater understanding and appreciation of what constitutes us data-wise, of the information we return to most often, and of which data is important enough to save for the future.

Definitions

Some Scrapbook-specific terms used often in this post are:

Scrapbook item

An entry in the Scrapbook collection. Each Scrapbook item always has, at minimum, a title, category, date, location, assets, and notes field associated with it; these appear as fields in the Scrapbook JSON object shown in the image below. In our current implementation, each Scrapbook item is a JSON object within a collection of JSON objects that make up the Scrapbook database. These JSON objects are stored in a document-oriented NoSQL database. A Scrapbook item is also referred to as a Scrapbook entry.

Scrapbook asset

A photo, document, link, web page, or any other digital format that is associated with a Scrapbook item and is stored on disk (locally or in the cloud). The names of the assets are listed in each Scrapbook item's assets field. Each Scrapbook item has at least one representative photo, which by convention is named 'Folder.jpg'.

Contextual data

Additional information or metadata about a Scrapbook item that goes beyond the title, category, date, location, and assets fields. Contextual data goes into the notes fields, which are called body and bodyObj. There may be several subfields defined under bodyObj depending on the item category.

Scrapbook data or collection

Refers generically to all the data managed by Scrapbook, both in the document-oriented NoSQL database and in Scrapbook assets storage.

Physical object

One or more physical entities, usually paper, but really anything we're interested in capturing. A physical object becomes a Scrapbook item or asset through photography or scanning, and by describing or capturing relevant contextual data about the object by whatever means possible.
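As a rough illustration of these definitions, the Python sketch below models a Scrapbook item as a plain dictionary (the shape a JSON document takes once parsed) and checks for the minimum fields and the 'Folder.jpg' convention. It assumes the item's date lives in idFolder (which begins with a YYYY-MM-DD date, as the queries later in this post suggest) and that the notes field is body. The sample values and the helper names `missing_fields` and `has_representative_photo` are hypothetical.

```python
import json

# Level 1 fields every Scrapbook item carries, per the definitions above.
# Assumption: the date lives in "idFolder" and the notes field is "body".
REQUIRED_FIELDS = ("title", "category", "idFolder", "location", "assets", "body")


def missing_fields(item: dict) -> list:
    """Return the required fields that are absent from a Scrapbook item."""
    return [field for field in REQUIRED_FIELDS if field not in item]


def has_representative_photo(item: dict) -> bool:
    """Check the 'Folder.jpg' convention for an item's representative photo."""
    return "Folder.jpg" in item.get("assets", [])


# Hypothetical item; all values are invented for illustration.
item = {
    "type": "scrapbookItem",
    "title": "Example concert",
    "category": "Performances",
    "idFolder": "2016-07-14",
    "location": "Bergamo, Italy",
    "geoLocation": {"type": "Point", "coordinates": [9.6695, 45.6952]},
    "assets": ["Folder.jpg", "program.pdf"],
    "body": "Went with friends; great encore.",
    "bodyObj": {},
}

print(json.dumps(item, indent=2))      # the item as a JSON document
print(missing_fields(item))            # []
print(has_representative_photo(item))  # True
```

Because document stores do not enforce a schema, a check like this has to live in the application layer, which is one reason we route data entry through the web interface.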

Left: A Scrapbook item represented in JSON. Right: Recent Concert category items displayed in a browser.


What Scrapbook Isn't

A photo or large document archive

Better tools to do this might be OneDrive, Google Drive, or Dropbox. While Scrapbook does save photos and documents, the emphasis is on saving only those key assets with associated context that relate the story of the Scrapbook item. For example, for a dinner with friends, we might save a group photo, a photo or two of what we ate or drank, or something telling about the evening. Along with the photo, we'll describe who was there, interesting conversation topics, and other bits of information about the event that we may refer to in the future.

A journaling or diary platform

Tools that already do this are Day One, RedNotebook, OneNote, and Evernote to name a few. While Scrapbook deals well with large quantities of textual data, it's best when the context of a Scrapbook item is captured as concisely as possible. A Scrapbook entry about a person, for example, contains only essential contextual information: name, where and how we met, context of the relationship, and perhaps a few descriptive details. If by chance we have a biography or resume of the person, that becomes an accompanying Scrapbook asset.

A contact management tool

Better tools to do this are Outlook, Gmail, and many more. Scrapbook saves bits of information about people that tell a story about who they are and what they mean to us. Sometimes, we include information we wouldn't want to include in a traditional contact management system such as favorite color, ex-spouses, despised or loved foods, things we've done together, or perhaps health events.

Redefining Digital Scrapbooking

Digital scrapbooking, as defined by a number of sites today (Smilebox being one example), is a process whereby you combine photos and text to create a one-time presentation as output. You upload photos, add text, and select a theme. The process returns a "digital scrapbook" for sharing, downloading, or printing. The result is not searchable.

We consider this a weak definition of digital scrapbooking. For us, digital scrapbooking, and Scrapbook specifically, is more than just the output of a singular presentation. Digital scrapbooking is:

- Focused on the collection

  - A digital scrapbook is the collection of items or digitized assets themselves.

  - You can select a subset or all items and direct them to an output (video, print, etc.), but how those items are consumed is not what constitutes a digital scrapbook.

  - The collection of items is searchable.

- More than photos

  - Any kind of printed material or object that can be scanned or photographed can be included, as can a file of any type or format.

  - A physical object, once digitized and recorded as a Scrapbook asset, can often be discarded.

    - For example, we have Scrapbook items that were "once" physical objects, such as awards, memorial plaques, or trinkets. And while it may sound perverse to save only digital representations of such objects, we can tell you that it is extremely liberating to let go of physical stuff this way and retain a compelling representation of it in Scrapbook for later.

  - The metadata (context) of the digitized object is important. It provides the where, when, what, and why of something, which breathes life into these digitized assets.

    - It's frustrating for us now to stumble upon a Scrapbook asset from our "early" days that doesn't have any contextual metadata, because the value of having saved the item is greatly diminished without context.

- A shift in perspective and habit

  - Storing assets digitally and sticking with it can be a significant commitment. It means that instead of shoving something you want to save into a folder or drawer, you take a moment or two to scan or photograph it, then make an entry in the Scrapbook system. And perhaps you then throw the original away, giving up the comfort and security of its physical presence.

  - Today, more and more Scrapbook items arrive digitized anyway. We find now that our focus is less on the input task (scanning/photographing) and more on managing, curating, and researching ways to query Scrapbook.

  - Discipline is key. You should think about what your future self, 30+ years from now, might be looking for in Scrapbook. More on this idea is discussed in the What We've Learned section.

What's in a Name?

We chose the name Scrapbook because it evokes the physical scrapbooks we were maintaining up to the time we went digital. The name is useful, if a little unfortunate, in that it conjures an image of scraps haphazardly pasted into a big binder. To avoid that association, we may change the name to MyJournal or MyDiary. With the Cortana digital assistant (explained below), we already refer to Scrapbook as "My Journal" because it's easier to pronounce and easier for Cortana to recognize.

Scrapbook History and Milestones

Our Scrapbook has been over 15 years in the making. Its evolution can best be described as sporadic, with significant inflection points described below. We tend to create a version of Scrapbook that we use for a long period of time before thinking about and implementing the next version.

- 2003: Origins - Scrapbook v1

  - Transition from physical scrapbooking to digital scrapbooking.

  - Establish a basic data schema, and understand which data makes sense to collect and for which categories.

  - Each Scrapbook item has a unique number generated sequentially.

  - Design choices that crystallized at this time include naming asset folders YYYY-MM-DD, organized in a "normal" folder hierarchy for compatibility and traversability within any typical file system.

  - Assets associated with each Scrapbook item are stored on a physical hard drive.

  - The service is hosted on a local server, at this point under a desk at home.

  - Metadata is maintained in an XML flat file. While this approach is not elegant, it's simple.

  - The interface to the Scrapbook collection is via ASP.NET pages.

  - Only single-user edit access is supported because the XML flat file is cached in local web server (IIS) memory (yikes!). Multiple users can view the collection at the same time.

  - Support for basic CRUD operations on the XML flat file database.

  - Data entry is cumbersome.

- 2009/2010: Ajax - Scrapbook v2

  - Size: 3,000 Scrapbook items in over 30 categories.

  - Continue to add to the collection. By now almost all of our old physical records, the printed material that *was* in folders and cabinets, have been scanned and entered into Scrapbook.

  - Still lacks interesting query methods.

  - Focus on a new front end using JavaScript, jQuery, ASP.NET, C#, LINQ, and web services to deal with cumbersome data entry.

  - Still based on the same basic design in terms of single-user access and an IIS in-memory database with CRUD.

  - Experiment with different ways to access data from mobile and with different layout paradigms; main use continues to be through the browser.

  - Still lacks context and connections between entries.

- 2012: Cloud - Scrapbook v3

  - Size: 4,000 items.

  - The XML file "database" is 6 MB.

  - Host the Scrapbook application in the cloud: the website/front end runs in Azure, not on-premises.

  - Host assets in Azure blob storage, syncing from blob storage to a local hard drive to maintain "visibility" of the data in a local file system.

  - Refine the look and feel, but otherwise keep the same technology stack: XML flat file in web server memory, jQuery, ASP.NET, C#, LINQ, and web services.

  - Use the CloudBerry tool to synchronize assets between blob storage and a local file system.

- 2015: OneDrive - Scrapbook v3.1

  - Size: 5,000 items.

  - OneDrive is now mature enough that Scrapbook asset storage (Azure blob storage) is synced to OneDrive instead of a local file system.

  - Devices (computer, phone) can now sync to OneDrive to provide local views of Scrapbook assets.

- 2017: MVC, Document DB - Scrapbook v4

  - Size: 5,700 items over 25 categories.

  - Scrapbook assets (photos, documents, etc.): 30 GB.

  - Replace the complicated set of ASP.NET pages and web services with an MVC 5 design.

  - Start with the ASP.NET MVC ToDo List and customize the heck out of it. Here are the instructions we started with.

  - Replace all functionality dealing with XML with JSON.

  - Replace the in-memory XML flat file with a NoSQL solution: a cloud-hosted document data structure (Azure Cosmos DB DocumentDB).

  - Single-user requirement removed. Now, Scrapbook is fully multi-user.

  - Scalability significantly increased.

  - Data schema now JSON-based:

    - Add geocode and friendly location name fields for each Scrapbook item.

    - Track update and modified times of each Scrapbook item.

    - Implement a two-level schema:

      - Level 1 (top-level) fields apply to all items, e.g., title, location, date, category.

      - Level 2 (second-level) fields depend on the category of the item, e.g., for a Scrapbook Book item there are author and synopsis fields, while for a Scrapbook Hike item there are length, duration, and elevation fields. This is possible because each document in the datastore need not have the same number of fields.

    - Assets belonging to Scrapbook items (e.g., images, documents) are tracked as part of each document.

    - Scrapbook items now have full-fledged GUIDs.

  - Reduce and reorganize categories, in particular, with level 2 fields.

  - Front end remains in the cloud (Azure App Service).

  - Implement Windows Live login to authenticate users and a custom solution to authorize authenticated users.

  - Backing store for assets continues to be Azure blob storage with sync to OneDrive.

  - LINQ is still a critical part of querying in the MVC design.

  - Cosmos DB (DocumentDB) allows for many more consumers, notably intelligence services such as the Azure Bot Framework and LUIS for natural language parsing.

  - Scrapbook is now accessible via multiple channels, including Skype, Cortana, and embedded web chat, using natural language with rich media output including voice.

  - The primary editorial interface is via web pages (MVC).

- 2018: Scrapbook101 - a simplified, open-source version of Scrapbook developed on .NET Framework and .NET Core. For more information, see the GitHub technical documentation and code for running on the .NET Framework or .NET Core.

- See the subsequent posts about Scrapbook given in the intro to see recent work.

Working with Scrapbook

We went out of our way in the What is Scrapbook? section to define Scrapbook as being about a collection of context and assets, and about the design and curation of that collection. That's true. But it's also true that what makes our Scrapbook stand out is how we now consume the data stored in it and use it in our daily lives. In this section, we'll talk about different ways we use Scrapbook data.

In the Scrapbook History and Milestones section, we summarize the technology behind our implementation of Scrapbook. While Scrapbook has had the core functionality of a database with a web front end since its initial implementation, it was the 2017 implementation that opened the door to many more possibilities for working with Scrapbook data. Prior to 2017, Scrapbook's data lived in an XML flat file hosted in memory. Though it supported multiple users viewing data, it allowed only single-user data entry via a web interface. Furthermore, it was not practical to share Scrapbook data outside of the website context unless we passed around an XML file, which would always be out of sync.

In 2017, the key change we implemented was to port Scrapbook to a NoSQL database solution and to reorganize the collection. Specifically, we use a NoSQL document store provided by Microsoft Azure Cosmos DB, although other services could work just as well. More precisely, the Scrapbook collection is a document-oriented database where each document is encoded in JSON. Each chunk of JSON (a document) represents one Scrapbook item.

Document stores such as Azure Cosmos DB are schema-less databases that enable rapid development by allowing for data models not confined to a strict schema. Each JSON document in a document store can have a different structure. In practice, we have limited the amount of variation. We currently have two types of JSON documents, identified by a type field: a Scrapbook category document and a Scrapbook item document. The latter make up 99.9% of the documents. A category document exists to enumerate the enforced categories, category synonyms, and the specific fields associated with each category (level 2 structure). Within Scrapbook item JSON documents, the schema depends on the category selected; we call this level 2 structure. Level 1 JSON schema includes the fields (title, location, etc.) that always apply regardless of the category of the Scrapbook item.
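The two-level schema can be sketched in a few lines of Python. This is a minimal illustration, not our actual documents: it assumes a category document carries a name, a list of synonyms, and the names of its level 2 fields, and that those level 2 fields live under an item's bodyObj. The key names, the type value scrapbookCategory, and the Hikes category are hypothetical.

```python
# Hypothetical category documents: one per category, listing synonyms and
# the level 2 field names that belong under an item's bodyObj.
CATEGORY_DOCS = [
    {"type": "scrapbookCategory", "name": "Books",
     "synonyms": ["book", "reading"], "fields": ["author", "synopsis"]},
    {"type": "scrapbookCategory", "name": "Hikes",
     "synonyms": ["hike", "trek"], "fields": ["length", "duration", "elevation"]},
]


def resolve_category(name, categories):
    """Map a category name or synonym (case-insensitive) to its document."""
    key = (name or "").strip().lower()
    for doc in categories:
        names = [doc["name"].lower()] + [s.lower() for s in doc["synonyms"]]
        if key in names:
            return doc
    return None


def level2_problems(item, categories):
    """List level 2 problems for an item: missing or unexpected bodyObj fields."""
    doc = resolve_category(item.get("category"), categories)
    if doc is None:
        return ["unknown category: %r" % item.get("category")]
    body_obj = item.get("bodyObj", {})
    problems = ["missing field: " + f for f in doc["fields"] if f not in body_obj]
    problems += ["unexpected field: " + f for f in body_obj if f not in doc["fields"]]
    return problems


book = {"type": "scrapbookItem", "category": "book",
        "bodyObj": {"author": "A. Writer"}}
print(level2_problems(book, CATEGORY_DOCS))  # ['missing field: synopsis']
```

Keeping the level 2 definitions in the database, rather than hard-coding them, means a new category only requires a new category document.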

The NoSQL approach immediately opened up Scrapbook to scalable, multi-user access, that is, multiple users performing CRUD (create, read, update, and delete) operations. Furthermore, the NoSQL approach hosted in the cloud allowed us to more easily and flexibly leverage Scrapbook data, which we discuss next.

An important development that we are exploiting is the recent availability of a new breed of tools based on artificial intelligence (AI). Microsoft and others are making advanced analytics and big data processing tools available to users that were not available just a few years ago. Specifically, in the Microsoft ecosystem, we are using tools that fall under several rubrics, which may be confusing at first glance but all convey the same basic message: Microsoft is trying to democratize the use of artificial intelligence and make the technology available to everyone. That's a good thing.

What this means for us is that we are able to create a digital assistant that is very personal, flexible, and powerful by leveraging and customizing the technologies underlying Cortana. By coupling the richness of the information we’ve amassed in Scrapbook with the intelligence services that power experiences such as Google Now, Cortana, Alexa, or Siri, we’ve realized a much more interesting and impactful experience.

Here are some of the key parts of the Microsoft ecosystem we are currently leveraging.

Bot Framework

This framework allows us to build apps that respond to natural language queries. The Bot Framework is consumed by multiple channels, including Cortana and Skype.

Microsoft Cognitive Services (MCS)

The Bing Speech API is used for Windows applications including Cortana, a personal assistant platform we leverage for spoken, natural language queries to access Scrapbook.

Language Understanding Intelligent Service (LUIS) takes sentences sent to it by a bot channel (such as Web Chat, Cortana, or a Skype bot) and interprets them by extracting the intentions they convey and the key entities that are present.

Cortana Intelligence Suite

The suite is a marketing term for the orchestration of Microsoft technologies (including the ones mentioned above) used together to create end-to-end intelligent solutions, typically involving Microsoft Azure services and Microsoft Machine Learning Studio. The potential of the suite is best understood by looking at the gallery of possible solutions, which shows common patterns and practices.

A challenge of these AI-centric tools is that they are, at least currently, rarely applied to personal use. Most of the demos and examples are for solving business cases like predicting sales or demand, detecting faults or anomalies, judging customer sentiment, or creating recommendations. Trying to use them with Scrapbook, in a personal data context, is an interesting challenge that we discuss more in the Future Directions section.

Some of the resources that we leverage in our current Scrapbook implementation are listed below.

Web interface

User access: In a browser, navigate to the Scrapbook URL, authenticate with a Windows Live account, and authorize with a custom authorization system.

From the inception of Scrapbook, access via a web interface, i.e., a web browser interacting with a hosted website, has been the primary modality for access. The current ASP.NET MVC site allows for CRUD operations as well as other custom pages.

Bot Framework: Skype, Cortana, and Web Chat

User access: You can use Skype, Cortana, and Web Chat as you normally would (from any device); however, the user's Windows Live account must be granted access to use the bot via any of these channels.

If you had asked us a year ago to imagine talking to a bot that interacts with our data collection, we wouldn't have been able to do it. In the space of a few months and some development work, we were able to implement natural language interfaces to query information that means something to us, verbally via Cortana and via messaging (Skype or Web Chat). The power to leverage natural language unlocks our data, and that's incredible. A big part of this leap forward is the Microsoft Bot Framework, which provides an underlying dialog structure, as well as the Language Understanding Intelligent Service (LUIS) API, which facilitates language parsing. In particular, LUIS enables us to send "utterances", the things we say or write in a bot conversation, to the LUIS API and, based on training via the application, receive scored intentions and key entities. Examples of intentions are find, list, describe, read, and show. Examples of entities are category, date range, and location. We see a great deal of opportunity in the use of bots and natural language, as discussed in the Future Directions section.
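To show what a bot can do with a LUIS result, here is a minimal Python sketch that picks the top-scoring intent and groups the extracted entities by type. The dictionary shape is modeled on the LUIS v2 prediction response (a topScoringIntent object, and entities carrying entity and type keys); treat that shape as an assumption. The function name `interpret`, the threshold, the scores, and the entity type names in the example are invented.

```python
from collections import defaultdict

# Intents named in this post; anything else is treated as "none".
KNOWN_INTENTS = {"find", "list", "describe", "read", "show"}


def interpret(luis_result, min_score=0.5):
    """Return (intent, entities_by_type) from a LUIS-style prediction result."""
    top = luis_result.get("topScoringIntent", {})
    intent = top.get("intent", "none").lower()
    if intent not in KNOWN_INTENTS or top.get("score", 0.0) < min_score:
        intent = "none"  # unknown or low-confidence intent: treat as no match
    entities = defaultdict(list)
    for ent in luis_result.get("entities", []):
        entities[ent["type"]].append(ent["entity"])
    return intent, dict(entities)


# Invented example result for one utterance.
result = {
    "query": "show me all concerts we went to near bergamo last year",
    "topScoringIntent": {"intent": "show", "score": 0.93},
    "entities": [
        {"entity": "concerts", "type": "category", "score": 0.88},
        {"entity": "bergamo", "type": "location", "score": 0.81},
        {"entity": "last year", "type": "dateRange", "score": 0.77},
    ],
}
print(interpret(result))
# ('show', {'category': ['concerts'], 'location': ['bergamo'], 'dateRange': ['last year']})
```

The grouped entities are exactly what a query builder needs: one list per kind of constraint (category, location, date range), independent of word order in the utterance.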

Azure Portal

User access: Navigate to the Azure portal via a browser and authenticate with our Windows Live accounts, which must either have administrator privileges or have been granted, at minimum, read access to the Scrapbook Cosmos DB (DocumentDB) instance containing the Scrapbook data.

Key components: NoSQL document store, Cosmos DB SQL query language

We include the Azure portal here as a "tool" because we do in fact perform some data analysis and grooming there. We never use the portal for entering new Scrapbook items. It is better to use the web interface for data entry because it implements all the necessary prompts and controls to ensure the integrity of data added or edited within the collection.

In the portal, there are various ways to issue queries against the document store using a "SQL-like query language with additional syntax features to handle JSON data types" [ref]. To see it in action, check out this playground.

The Cosmos DB document store is, of course, also accessible via an SDK or a REST endpoint. Access is mediated by a URI endpoint and an access key. Both the Web Chat and Bot Framework applications leverage the SDK.
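A minimal sketch of issuing a query through the Python flavor of the SDK (azure-cosmos) follows. The function `run_query` is hypothetical; it only assumes a container object exposing `query_items(query=..., parameters=..., enable_cross_partition_query=...)`, as the SDK's container client does. Passing values as named parameters rather than splicing them into the SQL text avoids quoting problems. A stand-in container is used so the sketch runs without an Azure account.

```python
def run_query(container, query, params):
    """Run a Cosmos DB SQL query with named parameters and return all results.

    `container` is assumed to behave like the azure-cosmos container client,
    i.e. to provide query_items(query=..., parameters=..., enable_cross_partition_query=...).
    """
    parameters = [{"name": name, "value": value} for name, value in params.items()]
    pages = container.query_items(
        query=query,
        parameters=parameters,
        enable_cross_partition_query=True,  # needed unless a single partition key is targeted
    )
    return list(pages)


# With the real SDK (pip install azure-cosmos), a container client is obtained like this:
#   from azure.cosmos import CosmosClient
#   client = CosmosClient(ENDPOINT, credential=KEY)
#   container = client.get_database_client(DATABASE_NAME).get_container_client(COLLECTION_NAME)


class FakeContainer:
    """Offline stand-in: records the call and filters by @category only (no SQL parsing)."""

    def __init__(self, docs):
        self.docs = docs
        self.last_call = None

    def query_items(self, query, parameters=None, enable_cross_partition_query=None):
        self.last_call = (query, parameters)
        values = {p["name"]: p["value"] for p in parameters or []}
        return iter([d for d in self.docs if d.get("category") == values.get("@category")])


docs = [
    {"id": "1", "category": "Correspondence", "title": "Postcard from Australia"},
    {"id": "2", "category": "Books", "title": "Popular science"},
]
container = FakeContainer(docs)
rows = run_query(
    container,
    "SELECT * FROM c WHERE c.type = 'scrapbookItem' AND c.category = @category",
    {"@category": "Correspondence"},
)
print([row["id"] for row in rows])  # ['1']
```

ENDPOINT, KEY, DATABASE_NAME, and COLLECTION_NAME in the comment are placeholders for the URI endpoint, access key, and names of a real instance.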

We also access the collection via the Azure Cosmos DB Data Migration Tool, which can move data in or out of Cosmos DB; via ODBC (Excel); or with Azure Machine Learning Studio, as discussed below.

Azure Machine Learning Studio

User access: Navigate via a browser to Microsoft Azure Machine Learning Studio, and authenticate using your Windows Live account. Provide the appropriate URI and key to access the Scrapbook Cosmos DB instance.

Scrapbook data (stored in Cosmos DB) can be imported into Azure ML Studio and analyzed to achieve various interesting objectives. ML Studio facilitates data transformations, for example flattening the collection or exporting all of it into CSV format, processing text fields with various standard algorithms, or passing data into a Jupyter notebook for analysis in R or Python. We are only in the most rudimentary stages of exploring the capabilities available here. Some of our ideas for future exploration include sentiment analysis (via text processing), category prediction from title (classification), image analysis (metadata extraction), and detecting and potentially automatically inserting missing data (via clustering analysis).
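Outside ML Studio, the same flattening can be done in a few lines of Python, for example in a Jupyter notebook. This is a minimal sketch under our own assumptions: nested objects such as bodyObj become dotted column names, lists such as assets are joined with semicolons, and the sample documents are invented.

```python
import csv
import io
import json


def flatten(doc, prefix=""):
    """Flatten nested objects into dotted column names, e.g. bodyObj.author.

    Lists such as assets are joined into one ';'-separated cell.
    """
    flat = {}
    for key, value in doc.items():
        name = prefix + key
        if isinstance(value, dict):
            flat.update(flatten(value, name + "."))
        elif isinstance(value, list):
            flat[name] = ";".join(
                json.dumps(v) if isinstance(v, (dict, list)) else str(v) for v in value
            )
        else:
            flat[name] = value
    return flat


def to_csv(docs):
    """Write flattened documents as CSV text, using the union of all columns."""
    rows = [flatten(d) for d in docs]
    columns = sorted({column for row in rows for column in row})
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, restval="")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Invented sample documents with different level 2 fields.
docs = [
    {"id": "b1", "title": "A book", "category": "Books",
     "bodyObj": {"author": "A. Writer"}, "assets": ["Folder.jpg"]},
    {"id": "h1", "title": "A hike", "category": "Hikes",
     "bodyObj": {"elevation": 850}, "assets": ["Folder.jpg", "map.gpx"]},
]
print(to_csv(docs))
```

Taking the union of columns matters because level 2 fields differ by category; items simply get empty cells for fields their category does not define.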

Excel

Access: ODBC Driver with URI endpoint and access keys.

Technology: Desktop or online versions of Excel.

With a special ODBC driver, you can load data directly from Cosmos DB, or you can load a CSV file that has been output from Azure ML Studio or the Migration Tool. Either way, you have data you can work with in Excel using pivot tables and analysis tools, as well as quick and easy graphing capabilities. It's interesting, for example, to create histograms or tree maps of category counts for an intuitive visual representation of the item collection in Scrapbook.
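The category counts behind such a chart are easy to compute before reaching for Excel. The following Python sketch, with invented sample data and hypothetical helper names, counts items per category (skipping category documents via the type field) and prints a crude text bar chart.

```python
from collections import Counter


def category_counts(docs):
    """Count Scrapbook items per category, most common first, ties by name."""
    counts = Counter(d["category"] for d in docs if d.get("type") == "scrapbookItem")
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))


def text_histogram(counts, width=40):
    """Render (category, count) pairs as a simple text bar chart."""
    if not counts:
        return ""
    largest = max(n for _, n in counts)
    label_width = max(len(name) for name, _ in counts)
    lines = []
    for name, n in counts:
        bar = "#" * max(1, round(width * n / largest))
        lines.append(f"{name:<{label_width}} {bar} {n}")
    return "\n".join(lines)


# Invented sample: six items plus one category document, which is skipped.
docs = [{"type": "scrapbookItem", "category": c}
        for c in ["Books", "Hikes", "Books", "Performances", "Books", "Hikes"]]
docs.append({"type": "scrapbookCategory", "name": "Books"})

print(text_histogram(category_counts(docs), width=12))
# Books        ############ 3
# Hikes        ######## 2
# Performances #### 1
```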

Searching

Let's discuss search, which, as we've said, is fundamental to the utility of Scrapbook. Scrapbook items, their associated contextual data, and their assets are only useful if we can find them.

Most of our queries to Scrapbook, whether through our natural language bot or the MVC web application interfaces, come down in the end to a SQL query. In our C# code, we sometimes use Language Integrated Query (LINQ) to construct SQL queries; in other cases (primarily the bot interface), we construct DocumentDB SQL queries directly in code. In the following examples, field names such as title, category, and body correspond to fields in the Scrapbook JSON schema. This isn't meant to be a thorough code review of how we search, just a few select examples to illustrate how we've implemented search in our applications.

Web interface

The following expression was captured using the Visual Studio debugger (MVC 5/C#) for a query for the category Books with the word "science" in the title or body.

```
{https://ENDPOINT/dbs/DATABASE_NAME/colls/COLLECTION_NAME
.Where(f => (((True AndAlso f.Category.ToLower()
.Equals(value(ScrapbookMVC.Controllers.ItemController+<>c__DisplayClass11_1)
.category.ToLower())) AndAlso (f.Type == "scrapbookItem"))
AndAlso ((False OrElse f.Title.ToLower()
.Contains(value(ScrapbookMVC.Controllers.ItemController+<>c__DisplayClass11_0).term))
OrElse f.Body.ToLower()
.Contains(value(ScrapbookMVC.Controllers.ItemController+<>c__DisplayClass11_0).term))))
.OrderByDescending(c => c.DateAdded).Take(40)}
```

This is the LINQ lambda expression generated after creating a LINQ expression. Expression trees allow us to build queries at runtime. The important thing to note here is that however we use LINQ (a LINQ expression directly or an expression tree), it is ultimately converted into the following SQL query made against Cosmos DB.

```
{{"query":"SELECT TOP 40 * FROM root
WHERE (((true AND (LOWER(root[\"category\"]) = \"books\"))
AND (root[\"type\"] = \"scrapbookItem\"))
AND ((false OR CONTAINS(LOWER(root[\"title\"]), \"science\"))
OR CONTAINS(LOWER(root[\"body\"]), \"science\")))
ORDER BY root[\"dateAdded\"] DESC "}}
```

A couple of notes:

- "root" in the SQL query refers to the collection itself.

- In the C# LINQ expression, property names start with an uppercase letter (Title instead of title) because in code we follow the standard C# naming conventions.

- In the C# LINQ expression, ENDPOINT, DATABASE_NAME, and COLLECTION_NAME stand in for the real values.

Bot Framework

This is an example of a SQL query generated in our C# code from the natural language query "Show me all concerts we went to near Bergamo last year."

"SELECT TOP 200 c.id, c.title, c.category FROM c
WHERE c.type = \"scrapbookItem\" AND CONTAINS(LOWER(c.category), \"performances\")
AND ST_DISTANCE(c.geoLocation, {'type': 'Point', 'coordinates':[9.66950988769531, 45.6952285766602]}) < 10000
AND c.idFolder >= \"2016-01-01\" AND c.idFolder <= \"2017-01-01\"
ORDER BY c.idFolder ASC"

In our Bot Framework code, the SQL queries are assembled piece by piece in code modules, each written to extract particular query parameters parsed from a user's natural language 'utterance' or query. Our code currently parses the following and translates them into DocumentDB SQL query syntax: Scrapbook category or sub-category, date and date range, geographic location or region (geo-point, geo-polygon), text string search, entity type, the number of results (or the ordinal result) to return, sort order, and mode of presentation.
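To make the piece-by-piece assembly concrete, here is a minimal Python sketch that builds a query shaped like the Bergamo example above from already-parsed parameters. The `ParsedQuery` container and the `build_sql` function are hypothetical illustrations, not our bot code (which is C#). We assume coordinates arrive in GeoJSON order (longitude, latitude) and dates as ISO strings compared against `idFolder`.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class ParsedQuery:
    """Hypothetical container for parameters extracted from an utterance."""
    category: Optional[str] = None
    point: Optional[Tuple[float, float]] = None  # (longitude, latitude), GeoJSON order
    radius_m: int = 10000                        # ST_DISTANCE works in meters
    date_from: Optional[str] = None              # ISO date strings compared to idFolder
    date_to: Optional[str] = None
    top: int = 200


def build_sql(q: ParsedQuery) -> str:
    """Assemble a Cosmos DB SQL query one clause at a time."""
    clauses = ['c.type = "scrapbookItem"']
    if q.category:
        clauses.append(f'CONTAINS(LOWER(c.category), "{q.category.lower()}")')
    if q.point:
        lon, lat = q.point
        clauses.append(
            "ST_DISTANCE(c.geoLocation, "
            f"{{'type': 'Point', 'coordinates': [{lon}, {lat}]}}) < {q.radius_m}"
        )
    if q.date_from:
        clauses.append(f'c.idFolder >= "{q.date_from}"')
    if q.date_to:
        clauses.append(f'c.idFolder <= "{q.date_to}"')
    return (
        f"SELECT TOP {q.top} c.id, c.title, c.category FROM c "
        f"WHERE {' AND '.join(clauses)} ORDER BY c.idFolder ASC"
    )


if __name__ == "__main__":
    print(build_sql(ParsedQuery(
        category="Performances",
        point=(9.66950988769531, 45.6952285766602),
        date_from="2016-01-01",
        date_to="2017-01-01",
    )))
```

Each optional parameter contributes its own clause only when present, which mirrors the modular approach described above. The sketch splices values into the string for readability; a safer variant would pass them as Cosmos DB query parameters (for example `@category`) instead of inserting user text into the SQL.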

Azure Portal

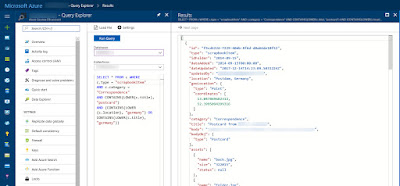

This SQL query finds Scrapbook items in the category Correspondence that concern a postcard from Australia.

SELECT * FROM c
WHERE c.type = "scrapbookItem" AND c.category = "Correspondence"
AND CONTAINS(LOWER(c.title), "postcard")
AND (CONTAINS(LOWER(c.location), "australia")
OR CONTAINS(LOWER(c.title), "australia"))

In fact, all of the SQL queries shown above can be run within the portal.

Azure Machine Learning Studio

This is a SQLite query that selects only a few columns from Scrapbook for analysis. This code would go inside an Apply SQL Transformation module.

SELECT title,
category,
DATETIME(SUBSTR(idFolder, 1, 10)) AS dt,
SUBSTR(idFolder, 1, 4) AS year
FROM t1;
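Because the Apply SQL Transformation module runs SQLite, the same transformation can be tried locally with Python's built-in `sqlite3` module. This is only a sketch: the two sample rows are made up, and we assume `idFolder` values begin with a `YYYY-MM-DD` date (consistent with the date comparisons in the bot query above); the suffix after the date is hypothetical.

```python
import sqlite3

# Made-up sample rows; idFolder is assumed to start with YYYY-MM-DD.
rows = [
    ("Example book title", "Books", "2010-03-14_example-book"),
    ("Example postcard", "Correspondence", "2015-07-02_example-postcard"),
]

conn = sqlite3.connect(":memory:")
# The module exposes its first input dataset as t1; recreate that table here.
conn.execute("CREATE TABLE t1 (title TEXT, category TEXT, idFolder TEXT)")
conn.executemany("INSERT INTO t1 VALUES (?, ?, ?)", rows)

# The same transformation shown above.
TRANSFORM_SQL = """
SELECT title,
       category,
       DATETIME(SUBSTR(idFolder, 1, 10)) AS dt,
       SUBSTR(idFolder, 1, 4) AS year
FROM t1;
"""

result = conn.execute(TRANSFORM_SQL).fetchall()
for row in result:
    print(row)
```

SQLite's `DATETIME` turns the bare date into a full timestamp (midnight), which gives downstream modules a proper time column, while the four-character prefix gives a cheap grouping key by year.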

Natural Language Queries

Speaking is natural to us, so why not use it to find out about things you care about? We can speak to Cortana, or simply send a message to our bot via Skype or Web Chat, for example. For purposes of demonstration here, it's easier to capture the output from one of our bot channels, in this case our Web Chat interface. But everything you see typed in the screenshots below can also be requested of Cortana.

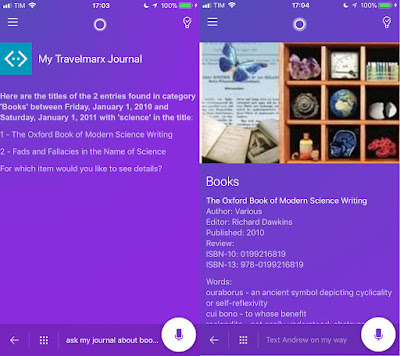

Three examples of using a bot to access Scrapbook using natural language queries.


Here are a few common interactions with Scrapbook and the corresponding phrases we use to invoke them:

Help:

- "What can I do?", "Help"

- "Reset"

- "Show me the category list", "List categories"

- "Describe category Books"

Show/Count:

- "Show me the last 3 concerts I went to"

- "How many hikes did we do in 2013?"

- "Show assets", "Show images for item 2"

Search:

- "Tell me about the last hike we did within 50 km of Seattle"

- "Show me everything we did in August"

- "Show me Correspondence of type postcard from Germany with Karl in the title in 2014"

Drill Down/Change Parameters:

- "Show me people from Seattle" followed by "what about in Bergamo"

- "How many hikes did we do in 2013?" followed by "next page" or "page 4"

- "Give me the details"

Cortana-specific:

- "Read it to me", "Read me the second one"

All of these natural language queries are made possible by the Microsoft Language Understanding Intelligent Service (LUIS) API. We use a few built-in LUIS entities, such as number, ordinal, and date-range, but we defined and trained most of the entities ourselves. One thing we found useful in working with natural language queries was to create a list of synonyms for each category. For example, queries about Scrapbook items in our category Hikes can be asked using the synonyms "walks", "treks", "trekking", "hiking", and "backpacking".

Our bot code maps each of these, via a category list lookup, to a query over the category 'Hikes'.
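A category list lookup of this kind can be sketched as an inverted synonym table. The sketch below is in Python rather than our bot's C#; only the Hikes synonyms come from our actual list, while the table layout and the `resolve_category` function are hypothetical.

```python
from typing import Dict, List, Optional

# Hypothetical synonym table: canonical category -> accepted terms.
# The Hikes synonyms are the ones listed above; the category name itself
# is included so that "hikes" also resolves.
CATEGORY_SYNONYMS: Dict[str, List[str]] = {
    "Hikes": ["hikes", "walks", "treks", "trekking", "hiking", "backpacking"],
}

# Invert the table once so each lookup is a single dictionary access.
SYNONYM_TO_CATEGORY: Dict[str, str] = {
    synonym: category
    for category, synonyms in CATEGORY_SYNONYMS.items()
    for synonym in synonyms
}


def resolve_category(term: str) -> Optional[str]:
    """Map a term extracted from an utterance to a Scrapbook category, or None."""
    return SYNONYM_TO_CATEGORY.get(term.strip().lower())


if __name__ == "__main__":
    print(resolve_category("Treks"))    # Hikes
    print(resolve_category("sailing"))  # None
```

Normalizing case and whitespace before the lookup keeps the table small, since the entity text returned for an utterance may be capitalized differently from the stored synonyms.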

Scenarios Revisited

In the Introduction and Motivation section, we listed scenarios that guided the development of Scrapbook. In this section, we revisit those scenarios to illustrate how each is addressed by one of the ways we consume information through our Scrapbook solution. All communications to and from Scrapbook and its supporting services are encrypted and made using an authorized, authenticated account.

Scenario 1: We are about to walk into a friend's house. What are the names of her three children?

- Search over category = People with title containing friend's name.
- Skype dialog:
  - "Show me people with Christina in the title"
  - "details"

Scenario 2: We remember a wonderful hot chocolate we had in Cuneo. What was the name of the café? What was the name of any place we ate at in Cuneo?

- Search over category = Restaurant and location = Cuneo.
- Skype dialog:
  - "Show me all restaurants in Cuneo"
  - "Details of 3"

Left: Screenshot for Scenario 1 – Skype bot people search. Right: Screenshot for Scenario 2 - restaurant search.

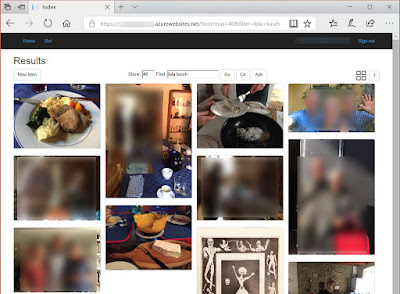

Scenario 3: We are planning to have a friend over for lunch, and we want to prepare a dish we haven't served her before. Obviously we want it to be something she'll enjoy, so we review meals we've had with her in the past.

- Using the Scrapbook Web interface, search over category = Events with title containing the friend's name. Alternatively, search only for titles containing the friend's name and "lunch".

Left: Scenario 3 - Using the web interface to find an event. Right: Scenario 3 -View of recent dinner photos.


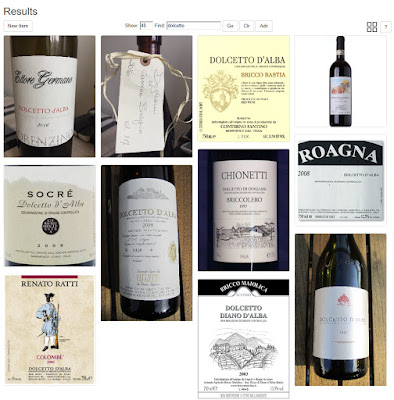

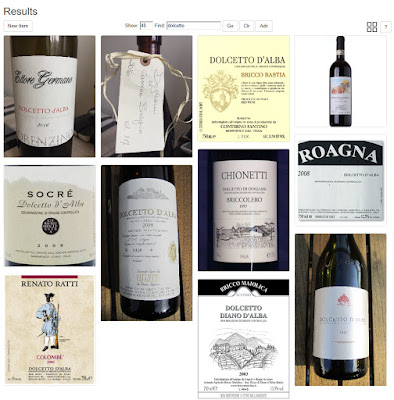

Scenario 4: We are planning a dinner with friends and we are looking for an interesting red wine to serve. We remember that we drank some nice dolcettos from Piedmont last year. What were they?

- Using the Scrapbook Web interface, search over category = Wine with title containing "dolcetto".

Left: Scenario 4 - Using the web interface to locate a type of wine. Right: Scenario 4 - View of wine labels for type dolcetto.


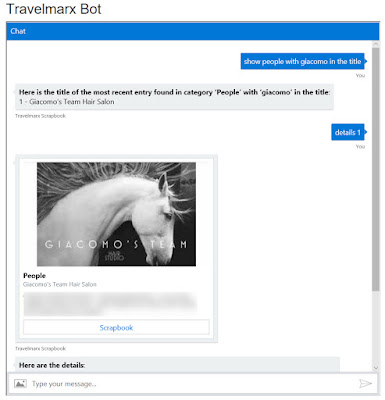

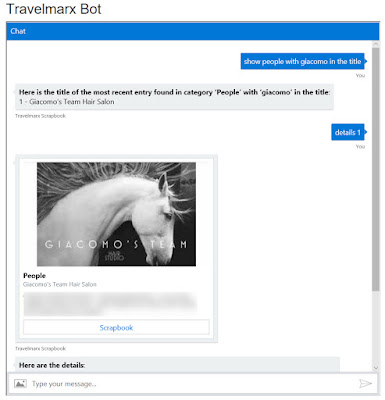

Scenario 5: We are about to call to make an appointment for a haircut. What are the names of the people who work at the salon, so that whoever answers the phone, we're able to address them by name?

- Search over category = People with title containing the salon's name.
- Web Chat dialog:
  - "Show me all people with Giacomo in the title"
  - "Details 1"

Scenario 6: We are wondering about an upcoming wedding we have been invited to and we want to pull up the invitation to confirm the date and protocol for gifts.

- Search over category = Correspondence with date = this year and title containing "invitation".
- Web Chat dialog:
  - "Show me all correspondence this year with invitation in the title"
  - "Details"

Left: Scenario 5 - Web Chat with bot searching over the people category. Right: Scenario 6 - Web Chat with bot searching over correspondence category.


Scenario 7: We are writing an email to a friend and we want to recommend the last hike we did in Piedmont. What were the details of that hike?

- Search over category = Hikes with date range set to include the past few months.
- Cortana [Desktop] query dialog:
  - "Ask My Journal to tell me about the last hike we did in Piedmont."
  - We use "My Journal" with Cortana because we found that Cortana understood it more reliably than "Scrapbook".

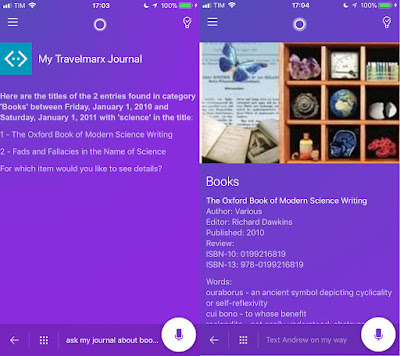

Scenario 8: We remember reading an interesting book in 2010 that had "science" in the title. What was it?

- Search over category = Books with date set to 2010 and title containing "science".
- Cortana [Mobile/iPhone] query dialog:
  - "Ask My Journal about books we read in 2010 with science in the title."
  - "Give me details for the first one."

Left: Scenario 7 - Windows 10 Desktop Cortana searching Scrapbook for hikes. Right: Scenario 8 - Cortana on iPhone searching Scrapbook for books.


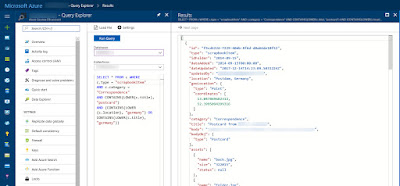

Scenario 9: We want to know if we've ever received a postcard from Germany.

- Search over category = Correspondence with title containing "postcard" and title or location containing Germany.
- In the Azure portal, go to Query Explorer and use the following:

SELECT * FROM c WHERE c.type = "scrapbookItem"
AND c.category = "Correspondence" AND CONTAINS(LOWER(c.title), "postcard")
AND (CONTAINS(LOWER(c.location), "germany") OR CONTAINS(LOWER(c.title), "germany"))

Scenario 9 - Using the Azure Portal over Cosmos DB to execute queries and find Scrapbook items.


Scenario 10: We want to know the average cocoa fat percentage of chocolate we tend to buy and what, if any, is the correlation between percentage and how we rate it.

- In Azure Machine Learning Studio, import our Cosmos DB Scrapbook data and search over category = Chocolate.
- View a histogram of percentage and check out a scatter plot of percentage against rating.
- Query for selecting data:

SELECT c.title, c.bodyObj.percentage, c.bodyObj.rating
FROM travelmarx AS c
WHERE c.type = "scrapbookItem" AND c.category = "Chocolate"
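Once the query returns title, percentage, and rating, the two questions in this scenario reduce to a mean and a Pearson correlation coefficient. The Python sketch below shows that arithmetic outside Azure Machine Learning Studio; the four rows are made-up values, and we assume percentage and rating are stored as numbers.

```python
import math
from statistics import mean

# Made-up rows shaped like the query output above.
chocolates = [
    {"title": "Bar A", "percentage": 70, "rating": 7},
    {"title": "Bar B", "percentage": 85, "rating": 9},
    {"title": "Bar C", "percentage": 60, "rating": 5},
    {"title": "Bar D", "percentage": 75, "rating": 8},
]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


percentages = [c["percentage"] for c in chocolates]
ratings = [c["rating"] for c in chocolates]

avg_percentage = mean(percentages)
r = pearson(percentages, ratings)
print(f"average cocoa percentage: {avg_percentage:.1f}")
print(f"correlation with rating: {r:.3f}")
```

A value of `r` near +1 would suggest we rate higher-percentage bars more highly; with real data, the scatter plot is still worth checking, since a single coefficient hides outliers and nonlinear patterns.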

Scenario 10 - Using Scrapbook data in Azure Machine Learning Studio to analyze chocolate habits!


Scenario 11: We want to understand which categories in Scrapbook have the most items as well as how many items there are per year over the last 15 years.

- In Excel, import Cosmos DB Scrapbook data (using the ODBC driver), create a pivot table, then a tree map of the data, and finally a histogram of items per year.
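The pivot table in this scenario is essentially a count of items per category and per year. As a rough Python equivalent, the sketch below tallies made-up items with `collections.Counter`, deriving the year from the first four characters of `idFolder` as the Azure Machine Learning query earlier does; the sample data is hypothetical.

```python
from collections import Counter

# Made-up items; only category and idFolder matter here.
items = [
    {"category": "Correspondence", "idFolder": "2014-05-01"},
    {"category": "Correspondence", "idFolder": "2014-11-20"},
    {"category": "Correspondence", "idFolder": "2015-02-03"},
    {"category": "Books", "idFolder": "2015-06-17"},
    {"category": "Hikes", "idFolder": "2013-08-09"},
    {"category": "Hikes", "idFolder": "2015-09-12"},
]

# Row and column totals of the pivot table.
by_category = Counter(item["category"] for item in items)
by_year = Counter(item["idFolder"][:4] for item in items)
# Category x year cells, like the body of the pivot table.
by_category_year = Counter((item["category"], item["idFolder"][:4]) for item in items)

print(by_category.most_common())
for year in sorted(by_year):
    print(year, by_year[year])
```

A `Counter` returns 0 for combinations that never occur, which matches the empty cells of a pivot table.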

Left: Scrapbook data analyzed in Excel and visualized as a treemap chart. Largest category is Correspondence. Right: Scrapbook category counts per year analyzed in Excel.


We believe that the four primary objectives we wanted to address with Scrapbook (managing archival data, providing context around data, making our data searchable, and owning our data) are well illustrated in these scenario examples.

We acknowledge that it's been a lot of work to reach this point: many hours of data entry, curation, planning, and programming to assemble the machinery around Scrapbook. But we've achieved the objectives we set out to satisfy, and we now have a personal information platform that works very well and is positioned to evolve and adapt as our needs and the available technologies change over time.

What We've Learned

We've learned a few things in 15 or so years of curating Scrapbook, which we'll share here. Even the guiding principles behind Scrapbook took years to come into focus.

Scrapbook guiding principles:

We own our data.

We use different services and platforms to "make" Scrapbook, but we control to what ends our data is used.

Data should always have context.

The usefulness of a Scrapbook item is diminished without context. We always include something descriptive about a Scrapbook entry. Did we like it? Who was there? What were our impressions? What are the names of the people or things involved? Anything can provide context as long as it conveys why the item exists in Scrapbook at all. We've found that visual context is particularly important, so we always include at least one image with each Scrapbook item.

Scrapbook assets should be accessible via a file system metaphor.

What, you say?! This may seem a bit old school, but we feel strongly that even with advances in storage technology (be it on-premises, cloud, or hybrid), maintaining an intuitive organization of Scrapbook assets via a hierarchical file system metaphor is important. ‘Human readability’ matters because technology shifts are typically disruptive, and while we hope that we'll always be able to migrate gracefully from one Scrapbook implementation to the next, there's always the possibility that a service or platform we rely on fails in such a way that ‘manual’ intervention is required. Scrapbook item context is currently maintained in a NoSQL database, separate from the assets, but those records, being JSON, are reasonably human readable. Furthermore, each asset reference in a record maps to our ‘file system’ organization, and asset names remain descriptive rather than just a GUID. So even in the event of a complete system failure, we can reasonably expect to retain the ability to access our information and to reconstruct Scrapbook, if only from the raw data in backups.

Portability: avoid technology lock-in.

Leveraging the latest and greatest technology stacks is fine, but we always keep in mind what it would take to change. Taking a longer-term view, on the order of decades, we expect that Scrapbook will evolve and shift between technology stacks and providers.

Digital scrapbooking is a deliberate choice.

It's one thing to collect stuff (assets, in our vernacular) casually or by circumstance, and it's another thing to organize it so that it's discoverable. It takes deliberate effort, sometimes a lot of effort, to consistently capture and categorize the key moments, objects, and activities occurring day to day. We've had to learn to be okay with letting the "physical" go. Old letters, postcards, and mementos can be appreciated as much in their digital forms. This process is already much easier now than when we began, and we anticipate it becoming easier still in the future.

Following are some additional observations:

- Garbage in, garbage out. The quality of the data going into Scrapbook is fundamental to the quality and utility of its output.

- Data curation is an ongoing process. We consider Scrapbook entities to be living and changing. If we have new information, context, or assets for an existing Scrapbook item, we'll update it. We aren't afraid to prune or combine data as needed. Periodically reviewing and curating older data is a valuable exercise because it provides an opportunity to normalize fields or information whose treatment or purpose has changed or evolved. Often, curating data informs the design and eventual implementation of a new feature or capability. And finally, revisiting existing information is valuable in itself.

- Data tends to arrive in batches. We aren't as consistent as we would like to be, so there are sometimes gaps in our data entry. That turns out to be okay, because over time the "clumps" even out. Consistency is what matters in the long run.

- The value of a Scrapbook entry is directly proportional to the contextual information associated with it. Date, location, category, and title fields are a good start for a Scrapbook entry, but an entry needs more than that. It's the why, how, who, and other category-dependent details that help flesh out an entry. It's frustrating to look up an item from 10 years ago and find only a terse sentence describing it. It's easiest to create entries when the events or activities are still fresh in your mind and any details you'd want to include are readily available.

- We maintain some statistics on our Scrapbook collection, for example, how many items we tend to enter per year and in which categories, and how many Scrapbook items are missing data in particular fields. We use this information to refine how the collection is organized and to improve our scrapbooking process.

- It helps to be consistent when creating entries. Doing so greatly improves the future usefulness of the data, and later operations on the data (e.g., programmatically or manually) will be much easier.

- It has been important for us to work with our data for a while before understanding how we wanted to structure (or restructure) our schema. Yes, many pitfalls are avoided with good planning and careful design up front, but not all scenarios are foreseeable. We continue to identify opportunities to improve our data structures, and we correct them as we go. Fortunately, our current architecture permits us to do this relatively easily. We fully expect that at some point in the future we'll migrate to yet another platform, which will suggest an entirely new data structure.

- We take a scenario-based approach when designing Scrapbook functionality. For example, our Scrapbook has information about books we've read, so we consider the types of book-related questions that a user (or we) might ask, like 1) the title of the last book read, 2) the subject of the last book read, 3) the number of books read during a given time period, 4) favorite books read, or 5) books about a certain subject. With these questions in mind, we can determine what information we need to collect to support a book Scrapbook entry.
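As an illustration of designing from questions, the sketch below answers four of the book questions above over a few in-memory entries. The field names (`dateRead`, `subject`, `rating`) and the data are hypothetical, chosen only to show which field each question depends on; they are not our actual schema.

```python
from datetime import date

# Hypothetical book entries; each question below relies on a specific field.
books = [
    {"title": "Book A", "dateRead": date(2010, 2, 1), "subject": "science", "rating": 4},
    {"title": "Book B", "dateRead": date(2010, 9, 15), "subject": "history", "rating": 5},
    {"title": "Book C", "dateRead": date(2011, 1, 3), "subject": "science", "rating": 3},
]


def last_book_read(entries):
    """Question 1 needs a reliable read date on every entry."""
    return max(entries, key=lambda b: b["dateRead"])["title"]


def books_read_between(entries, start, end):
    """Question 3 counts entries whose read date falls in [start, end]."""
    return sum(1 for b in entries if start <= b["dateRead"] <= end)


def favorite_books(entries, min_rating=5):
    """Question 4 needs some kind of rating, not just free-text notes."""
    return [b["title"] for b in entries if b["rating"] >= min_rating]


def books_about(entries, subject):
    """Question 5 needs a subject field, not just a title."""
    return [b["title"] for b in entries if b["subject"] == subject]


print(last_book_read(books))
print(books_read_between(books, date(2010, 1, 1), date(2010, 12, 31)))
print(favorite_books(books))
print(books_about(books, "science"))
```

Writing the questions as functions like this makes missing fields obvious early: if an entry has no rating or subject, the corresponding question simply cannot be answered.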

Future Directions

The move to a NoSQL document database was a key change to Scrapbook that has made our data consumable in a number of new and interesting ways, in particular with AI-based tools, which are becoming increasingly accessible and easier to use. But we are just at the initial stages. The following four themes describe areas where we are focusing our development time.

Theme 1: Improved input mechanisms.

We are working to streamline the capture of new Scrapbook entries. The goal is to be able to create an entry on the fly, as the event is occurring or just after it has happened, in the same way you might share a photo or video via a messaging app. This is important because when we capture data in the moment, we tend to capture more of its essence, and we avoid the chore of data entry later. (That's not to say that a Scrapbook entry can't be edited later.) Therefore, we are looking at tools and processes to make it as quick and easy as possible to create Scrapbook entries. We are targeting natural language interaction via the Bot Framework as one way to do this.

Currently, we use natural language queries to request information from Scrapbook using interfaces such as Cortana or Skype. But there's no reason we can't extend our natural language bot interfaces, along with AI resources on the back end, to create and populate initial metadata for new Scrapbook entries. For example, using the Computer Vision API, we might extract location, date, faces, and even subjects and themes from a photo shared with the Scrapbook bot via Skype. The notes for the Scrapbook item could be dictated and converted to text using a service such as the Bing Speech API.

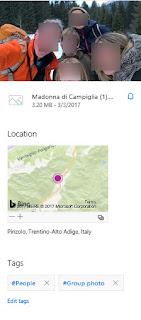

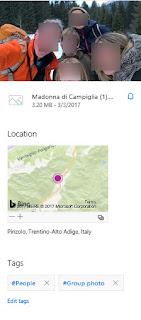

Theme 2: Leverage automated metadata sources.

Photos and documents in file systems (locally or in the cloud) are increasingly augmented with rich metadata auto-generated using optical character recognition (OCR) and image processing algorithms. Below are two examples from OneDrive. One example shows a photo with text that was extracted, with the OCR going as far as to recognize a website and surface it separately. The other example shows the location data and tag data saved with a photo. Our Scrapbook system doesn't yet attempt to interpret any of this auto-generated metadata; it must still be entered manually. It's an open question how much of this we should include in Scrapbook. We are investigating the Cognitive Services vision APIs, including the Computer Vision API and the Face API, to tag and extract descriptive information from photos directly.

Theme 3: Do more with geocodes.

Example of Auto-Generated Metadata - Location and Image Tagging

We only recently started saving friendly location names with each Scrapbook item. Friendly names (e.g., Seattle, WA) are geocoded to obtain longitude and latitude. The location data has proven extremely useful for simple questions such as "show me all postcards from France" or "show me all museums we went to in Rome". We've found location data to be so important that we're updating older Scrapbook items with location.
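Once friendly names are geocoded, a question like "within 50 km of Seattle" becomes a distance comparison. Cosmos DB does this server-side with ST_DISTANCE, which returns meters (as in the bot query earlier). The Python sketch below only illustrates the idea client-side, using the haversine great-circle formula on a spherical Earth, so its distances may differ slightly from those Cosmos DB computes. The sample items and coordinates are approximate and hypothetical, and coordinates use GeoJSON (longitude, latitude) order.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters (spherical model)


def haversine_m(lon1, lat1, lon2, lat2):
    """Great-circle distance in meters between two (lon, lat) points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def within(items, center, radius_m):
    """Return titles of items whose coordinates lie within radius_m of center."""
    lon0, lat0 = center
    return [
        item["title"]
        for item in items
        if haversine_m(lon0, lat0, *item["coordinates"]) < radius_m
    ]


SEATTLE = (-122.33, 47.61)  # approximate (longitude, latitude)
hikes = [
    {"title": "Hike north of Seattle", "coordinates": (-122.33, 47.91)},
    {"title": "Hike near Portland", "coordinates": (-122.68, 45.52)},
]

print(within(hikes, SEATTLE, 50_000))
```

In practice the filter belongs in the database query, where it can use the spatial index; a client-side version like this is mainly useful for checking results or for data exported elsewhere.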

Besides location-sensitive queries, we are looking at ways to search for and view data via a map interface.

Example of Auto-Generated Metadata - Extracted Text

Theme 4: Apply machine learning to Scrapbook.

We've barely begun to scratch the surface in applying AI-based tools and services to interact with Scrapbook. It's also exciting to think about the possibilities of using Scrapbook data in machine learning scenarios. We have a lot more work to do here, as even the scenarios in which we'd use machine learning are not yet obvious.

Machine learning at its simplest is about transforming data into intelligent action. As described in the Introduction to Machine Learning page: "[m]achine learning is a data science technique that allows computers to use existing data to forecast future behaviors, outcomes, and trends." The steps of turning data into action can be represented by this sequence of questions:

Descriptive: What happened?

- With Scrapbook, we currently have a good handle on this question. We have categories, and we have context to describe "what".

Diagnostic: Why did it happen?

- With Scrapbook (personal data), it's not a relevant question. Scrapbook items are things we wanted to happen, made happen, or that happened to us. We could, however, imagine a field in the JSON schema for Scrapbook items set to 1 for something planned or expected and 0 for something unplanned or unexpected. The 'why' then becomes more interesting.

Predictive: What will happen?

- This is the holy grail of machine learning. In the context of Scrapbook, it's asking whether we can make predictions based on our existing data. But predictions of what? We currently don't have Scrapbook data fields that are easily "predictable" from other fields (the inputs are called features in machine learning), unlike the classic example of predicting an automobile's price given features like engine size, number of doors, and average mpg. Could we use Scrapbook to predict the next book we'll read? Or the friend we'll meet for lunch?

Prescriptive: What should I do?

- Its meaning for Scrapbook is even more abstract than the predictive analytics question. What could we reasonably expect to control by analyzing existing Scrapbook data?

Over 10 years ago, we started thinking about how we could put Scrapbook to good use for something relevant to us, rather than for selling us ads or news. Today, we have access to the tools to at least start this analysis, even as we still have a lot to figure out in terms of what questions we need to be asking and what data we need to collect to answer them. With Scrapbook in its present form, we have started to investigate some basic machine learning areas such as outlier detection (items in Scrapbook that are not categorized correctly) and sentiment analysis (analyzing our notes fields to determine sentiment, positive or negative).

To answer the predictive and prescriptive types of machine learning questions, we are going to have to rethink how we collect data, and we will likely need to collect new types of data. Imagine, for example, that each Scrapbook item (book, film, person, event, etc.) has a utility ranking in terms of how important that item is to us. Then it's not hard to imagine that utility values could be predicted, and that models could be built from them to help us decide which items give us the most utility, thereby giving us prescriptive guidance. It would be doing with data and machine learning what we already do intuitively when choosing how to spend our time.